Q & A for 3/16/23 ChatGPT for OSINT Webinar

Earlier this week SANS hosted a one-hour Webinar "The New OSINT Cheat Code: ChatGPT". You can watch the webinar and view the slides here: https://www.sans.org/webcasts/new-osint-cheat-code-chatgpt/

I used up the entire hour of the allotted time giving the presentation so I promised that we would gather the questions and I would answer them on my blog. I haven't edited these for grammar etc, just pasted in the questions and responded to them.

Q: If people were proficient in "google dorks" - to uncover whatever they may find. How does the landscape shift if future iterations of ChatGPT adapts to the majority of population's search as it comes to understand what people are seeking out.

A: I can easily see “Google dorks” becoming less important in the future as the focus shifts towards writing quality prompts explaining EXACTLY what you’re looking for and letting that specificity improve your signal-to-noise ratio. I’m picturing an enhanced user experience with an increased dependence on AI. There will undoubtedly be concerns about this since it could so easily be used to influence users.

Q: I’ve heard that in some cases it will make up a citation - is that something you have seen?

A: I haven’t seen a ton of it but it 100% happens. On a recent happy hour with my friend and fellow SANS Instructor Mick Douglas, he shared two examples of this that he had seen. In one instance where he was using ChatGPT to write some code, it utilized a function that sounded extremely interesting. When Mick went to research the function, he found it never existed. The crazier example was when Mick was having ChatGPT document some code. It put a comment in describing, accurately, what a function did, and giving a link to a blog post where a user could learn more about the capability. When Mick went to research the blog post, it didn’t exist, and he could find no evidence of the blog itself having ever existed.

I think things like this will become less common as the model is trained further based on feedback from humans, but when they do happen, they’re fascinating.
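One cheap sanity check for the made-up function problem is to ask Python itself whether a name exists before trusting generated code. This is only a minimal sketch: `function_exists` is a hypothetical helper name, and it only proves the name is importable and callable, not that it does what ChatGPT claimed.

```python
import importlib


def function_exists(module_name: str, attr_name: str) -> bool:
    """Return True if the module imports and exposes a callable with this name."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # The module itself may be invented, or simply not installed.
        return False
    return callable(getattr(module, attr_name, None))


if __name__ == "__main__":
    print(function_exists("json", "loads"))
    # "load_fast" is a made-up name used here purely for illustration.
    print(function_exists("json", "load_fast"))
```

A `False` result can also mean the package just isn't installed on your system, so treat it as a prompt to go read the real documentation rather than as a verdict.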

Q: I read on HackerNews that GPT 4 has effectively killed jailbreaking this morning, so I'm not sure how much longer jailbreaking will work... :'(

A: To quote Jeff Goldblum from Jurassic Park: “Life, uh, finds a way”.

Q: How do you envision cybercriminal actors exploiting ChatGPT (other than drafting phishing email messages)?

A: This is an extremely scary question to think about. Think of how much better at conversation ChatGPT is now than many humans pulling off scams. Automating an intelligent scam artist chatbot and turning it loose may be the new hot passive revenue model we see blowing up YouTube finance influencers 😊

Think of how Whisper provides the capability it does on a local system. I don’t think we’re far off from ChatGPT capability running on our system in a way we can control. Facebook’s LLaMA model may be a big step towards that. If we get that type of capability on a local system, the sky would seemingly be the limit. Can we turn off its moral limitations? Can we have it think up new online scams and carry them out while we sleep?? I’m fascinated to see these answers and think we’re in for a wild ride.

ChatGPT can already find security vulnerabilities in code; how long before that’s weaponized, if it isn’t already? Sock puppet and deep fake creation at scale, etc. “Hey ChatGPT, write me some secure ransomware malware”... Wild ride.

Q: What are the privacy concerns when using chatgpt

A: Similar to search engines in many ways. I’ve worked in environments where there was information too sensitive to Google, and the same would apply to ChatGPT since there are always questions about how data is stored, handled and who has access to it. The same way Google could build an unbelievably accurate profile about its users if it wanted to, a user’s “ChatGPT” of choice could likely do the same given enough usage over time.

ChatGPT tells you not to paste secret keys in when you’re asking it to write code, but you know there are plenty of people who do. At some point, it seems likely that some inadvertent disclosure of sensitive information will occur. We’ve seen it make up facts, so sharing things it shouldn’t seems kind of inevitable.
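If you want a safety net before pasting code into a chat window, something like the following could scrub the obvious offenders first. It's a rough sketch under loud assumptions: `redact_secrets` and the single regex are illustrative only, they catch simple `name = "value"` assignments whose names look sensitive, and they will miss plenty of real-world secret formats.

```python
import re

# Illustrative pattern only: quoted values assigned to names that contain
# words like "api_key", "secret", "token", or "password".
SECRET_ASSIGNMENT = re.compile(
    r"""(\b\w*(?:api_?key|secret|token|password)\w*\s*=\s*)(["'])(.*?)\2""",
    re.IGNORECASE,
)


def redact_secrets(source: str) -> str:
    """Replace the quoted value of sensitive-looking assignments with REDACTED."""
    return SECRET_ASSIGNMENT.sub(r"\g<1>\g<2>REDACTED\g<2>", source)


if __name__ == "__main__":
    snippet = 'API_KEY = "sk-abc123"\nname = "matt"'
    print(redact_secrets(snippet))
```

Still read the result with your own eyes before you paste it anywhere; a regex is not a substitute for knowing what's in your code.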

Q: How often does it give you low-quality or bogus links?

A: Similar to the “making up facts or citations” question, it happens, but I haven’t had much of it. To be fair, I haven’t asked it for actual links THAT often so the problem could be more common than I’m giving it credit for.

Q: Do you find that the links aren't always accurate? I ask for the url for everything , however I have noticed many times the URL is no longer working or the video has been taken down.

A: Sometimes yeah. It will be SO nice when it can access the live internet and you can have it check its answers.
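Until then, you can do the checking yourself. Below is a minimal sketch that pulls URLs out of a pasted answer and tests whether each one responds. The helper names are hypothetical, it uses only the Python standard library, and a live link only tells you the page exists, not that it says what ChatGPT claimed.

```python
import http.client
import re
import urllib.request

# Rough URL matcher; stops at whitespace and common closing punctuation.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+")


def extract_urls(text: str) -> list:
    """Return the URLs found in text, with trailing punctuation trimmed."""
    return [url.rstrip(".,;:!?") for url in URL_PATTERN.findall(text)]


def link_is_alive(url: str, timeout: float = 10.0) -> bool:
    """Return True if a HEAD request to the URL gets a 2xx or 3xx response."""
    try:
        request = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, ValueError, http.client.HTTPException):
        # Covers HTTP errors, DNS failures, timeouts, and malformed URLs.
        return False


if __name__ == "__main__":
    answer = "You can watch it at https://www.sans.org/webcasts/new-osint-cheat-code-chatgpt/."
    for url in extract_urls(answer):
        print(url, link_is_alive(url))
```

Some sites reject HEAD requests or block scripted clients, so a `False` here is worth a manual look before you call the link bogus.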

Q: Where can we find the Obi Wan Kenobi example? So good.

A: https://www.reddit.com/r/ChatGPT/comments/11dv3vy/chatgpt_jailbreak_i_found_today_can_be_used_for/

Q: How does it do on debugging and unit-testing the code it writes

A: THIS is a loaded question!!!! (I kid)

There have been times it’s amazed me, and times I wanted to smash my keyboard.

I think it’s definitely better at some languages (Python) than others. With Python code it kinda reminds me of the early days of using a GPS. It would take you across the town or across the country with no issues, but you were on your own for the last 100 yards or so. It would get you near the parking lot but not in the parking lot. With Python I’ve had code I’ve only had to make very minor tweaks to, and some that were definitely worse. When I had it help me write an Excel macro recently, that was an exercise in frustration, but I eventually got it.

Try to be specific with versions of software etc. that you’re using, and you can always try feeding it back errors you receive to see if it can adjust. The “safest” way is to start off with very simple code and slowly add in functionality. That way you can check your code for errors along the way and adjust it if need be.
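The "feed it back the error" loop can be sketched in a few lines. This is an illustration only: `run_and_report` and `build_fix_prompt` are hypothetical names, the prompt wording is just one way to phrase it, and `exec` runs whatever you hand it, so only try this on code you've read, ideally in a VM.

```python
import platform
import traceback


def build_fix_prompt(code: str, error: BaseException) -> str:
    """Bundle the Python version, the code, and the traceback into one prompt."""
    trace = "".join(
        traceback.format_exception(type(error), error, error.__traceback__)
    )
    return (
        f"I'm running Python {platform.python_version()}.\n"
        f"This code:\n\n{code}\n\n"
        f"fails with this error:\n\n{trace}\n"
        "Please fix the code and explain what was wrong."
    )


def run_and_report(code: str):
    """Run the code; return None on success or a follow-up prompt on failure."""
    try:
        exec(compile(code, "<generated>", "exec"), {})
    except Exception as error:
        return build_fix_prompt(code, error)
    return None


if __name__ == "__main__":
    print(run_and_report("print(undefined_name)"))
```

Including the version number up front covers the "be specific" advice, and pasting the full traceback usually gets a better fix than describing the error in your own words.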

Q: Many companies are blocking access to ChatGPT, thinking staff could accidentally share PI, etc... I think this will change as more people understand the use cases? Thoughts?

A: “Throwing the baby out with the bath water” springs to mind, also the chuckles as people are typing the same sensitive information into Google…

With Microsoft coming on board the “private” ChatGPTs are inevitable, and will likely be very profitable as businesses throw money at the problem for a slight reduction of risk. (Just my opinion).

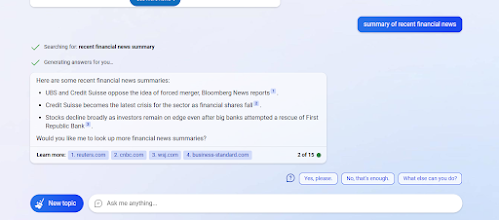

Q: With respect to live web data and OSINT, could Matt speak to what he thinks about Bing Chat for OSINT, since it’s using GPT-4 as its base model but with the ability to search Bing’s index?

A: When GPT-4 launched this week Microsoft did indeed say that Bing has been using a modified GPT-4 model for its results for the past several weeks. I haven’t seen a ton of difference in the results, but the new “chat” functionality has incredible potential.

I haven’t seen it do anything super amazing yet (It won’t provide financial advice etc. currently). But it’s easy to dream about what ChatGPT with internet access will morph into over the next few months.

Q: Does Whisper work locally or is your data sent to an unknown data center?

A: The Whisper models are available to be downloaded and run offline. The code is at: https://github.com/openai/whisper

I sense a blog post in my near future where I go through the steps of how to do this in a VM.
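In the meantime, here is a minimal sketch of local transcription, based on the usage shown in the project's README. It assumes you have installed the `openai-whisper` package and `ffmpeg`; the file name `interview.mp3` is a placeholder, and `format_segments` is a hypothetical helper added just for readable output. The model weights are downloaded the first time a model is loaded, so that first run needs a connection; after that the audio is processed on your own machine.

```python
def transcribe_locally(audio_path: str, model_name: str = "base") -> dict:
    """Transcribe an audio file with a locally run Whisper model."""
    # Imported here so the rest of the sketch loads without the package.
    import whisper  # pip install -U openai-whisper (ffmpeg is also required)

    model = whisper.load_model(model_name)
    return model.transcribe(audio_path)


def format_segments(result: dict) -> str:
    """Turn Whisper's timestamped segments into one line per segment."""
    lines = []
    for segment in result.get("segments", []):
        start, end = segment["start"], segment["end"]
        lines.append(f"[{start:.1f}s-{end:.1f}s] {segment['text'].strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    # "interview.mp3" is a placeholder path for illustration.
    result = transcribe_locally("interview.mp3")
    print(result["text"])
    print(format_segments(result))
```

Larger model names generally trade speed for accuracy, so it's worth starting with a small one to confirm the setup works.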

Q: Do we know whether ChatGPT is doing technically accurate work or if it's just doing educated guessing?

A: I think that would vary greatly depending on the type and complexity of the question. For simple questions, I would have a fairly high degree of trust in the results. If the question is on a topic where the model’s training data is biased or incomplete, it may generate less accurate or misleading information. The good news is that as all models get more and more training data, we should see the already impressive results improve even more.

Q: Does Matt have any examples of where ChatGPT can be useful in the the security risk space? Examples being planned protests, geopolitical instability, sourcing from websites in foreign languages, with a capability to assign credibility based on multiple-source reporting? In short - supplemental support via AI-based tactical security analyst functionality.

A: As someone who spent many years being responsible for persistent monitoring, I have an irrational love of this question.

Right now I think the big help is in writing code to automatically gather information. But we may be literally weeks away from having functionality very similar to ChatGPT running on our local systems. At that point, you let your imagination run wild. Look at my quick “putting it all together” section at the end of this week’s webinar.

Imagine you’re responsible for monitoring news from Belarus. Identify local, relevant news sources from the region, have some ChatGPT-created Python code scrape the websites, and have the local models analyze the text and provide summaries and potentially emerging trends and warnings. If you really want to blow your mind, imagine competing hypotheses for indications a specific type of activity may occur in the near future. In addition to daily summaries of news from a region, you could create two artificial analysts. One argues why these events will soon occur, while the other argues the counterpoint. We’re SO close.
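To make the two-analyst idea a little more concrete, here is a rough sketch of how the prompts might be wired up. Everything in it is an assumption for illustration: the function names, the prompt wording, and especially `ask_model`, which stands in for whatever model you end up running (local or hosted) and simply takes a prompt string and returns text.

```python
from typing import Callable, Dict, List


def build_analyst_prompts(hypothesis: str, summaries: List[str]) -> Dict[str, str]:
    """Build one prompt arguing for the hypothesis and one arguing against it."""
    evidence = "\n".join(f"- {summary}" for summary in summaries)
    stances = {
        "proponent": "Argue why this WILL occur in the near future.",
        "skeptic": "Argue why this will NOT occur in the near future.",
    }
    return {
        role: (
            "You are an analyst reviewing regional news.\n"
            f"Hypothesis: {hypothesis}\n"
            f"{instruction}\n"
            "Use only the evidence below and say which items you relied on.\n"
            f"{evidence}"
        )
        for role, instruction in stances.items()
    }


def run_competing_analysis(
    hypothesis: str, summaries: List[str], ask_model: Callable[[str], str]
) -> Dict[str, str]:
    """Send both prompts to the model and return each analyst's argument."""
    prompts = build_analyst_prompts(hypothesis, summaries)
    return {role: ask_model(prompt) for role, prompt in prompts.items()}
```

Feeding both analysts the same daily summaries keeps the comparison fair, and a human still makes the final call on which argument holds up.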

Q: how do you get around the character limitations within chagpt to handle analysis for data this size?

A: Right now, you kinda don’t. The new GPT-4 API has increased this somewhat, but the increase comes with an increase in cost. The size limitation frustrates me more than ChatGPT’s lack of internet access. But as I stated earlier, we’re SO close to having similar capabilities hosted on our local computers.
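One partial workaround people use is to split a long document into pieces that fit, process each piece, and then combine the results. The sketch below only does the splitting, and it comes with assumptions: `chunk_text` is a hypothetical helper, the 8000-character default is an arbitrary placeholder, and the models actually count tokens rather than characters, so a character budget is only a rough stand-in. The model also won't see connections that span chunks.

```python
def chunk_text(text: str, max_chars: int = 8000) -> list:
    """Split text into chunks of at most max_chars, preferring paragraph breaks."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")

    chunks = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        # A single oversized paragraph gets cut at the character limit.
        while len(paragraph) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(paragraph[:max_chars])
            paragraph = paragraph[max_chars:]
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    sample = "First paragraph.\n\nSecond paragraph.\n\nThird paragraph."
    for number, chunk in enumerate(chunk_text(sample, max_chars=40), start=1):
        print(number, repr(chunk))
```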

Q: Apologies if you mentioned it, but is this the GPT-4 version or the 3.5?

A: All of my screenshots from the webinar were 3.5

Q: Do you need a login for ChatGPT? If so, do you recommend not using a personal/work email or info for that login when working with possibly sensitive info?

A: I use my “real” email address for it, but you could easily use a sock puppet account.
