Leveling Up Your OSINT Game: Creating a Professional Email on a Budget

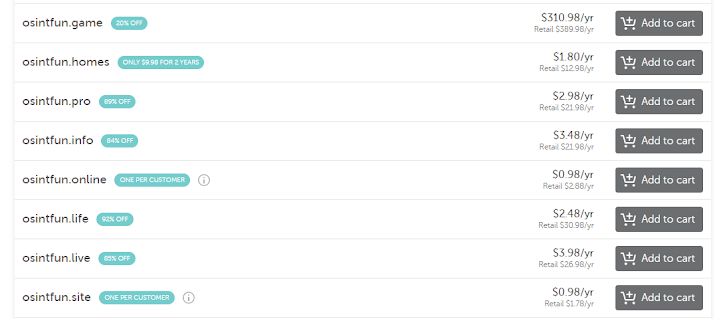

Some sites do not allow new users to register with a “free” email such as gmail.com or outlook.com. This is especially true if a site offers some free capability, but with the hope of converting you into a paying customer. Most OSINT practitioners would prefer to avoid using their “real” work email to signup for services, so the easiest solution is to register a domain (if you don’t already have one) and setup an email account using that. In this blog post we’ll explore how to accomplish this task as easily, and inexpensively as possible. Step 1: Acquire The Domain Many of us have likely purchased a .com or other domain over the years. Maybe you still have some, or maybe they expired. For years the only domain registrar I used was Google Domains. It was easy, inexpensive and provided free private registration to help enhance privacy. Recently, Google decided to get out of the domain registration business and is in the process of transferring all registered domains to Square...